4 Widely Shared Stories About A.I. That Were Really B.S.

Around 18 months ago, we published an article debunking several hyped-up stories about artificial intelligence. “Well, that takes care of that,” we said, patting ourselves on the back. Clearly, we’d put a pin in that bubble.

Instead, the world took that article as a signal that it was time to embrace A.I. with all its heart, all its soul, all its mind and all its strength. Whole industries reorganized themselves. Companies gained trillions in imaginary value. And people went nuts over a bunch of stories about A.I.: stories that, when we really dig into them, turn out not to be what they first seemed at all.

The A.I. That ‘Outperforms’ Nurses


Nvidia is making new A.I. nurses, said headlines, nurses that are actually better than their human counterparts. Nvidia is a pioneer in real A.I. technology (if you’re interested in such stuff as “ray reconstruction”), and they also benefit from general A.I. hype because they make the chips that power A.I. We’re in a gold rush, and Nvidia is selling shovels.


Naturally, no one who saw the nurse headlines thought A.I. can completely replace nurses. “Let’s see your algorithm place a bedpan!” people joked (or insert an IV or change a dressing or do any of the other tasks a nurse must do). But, of course, Nvidia wasn’t claiming their software could do anything physical. They were suggesting their A.I. could think better than nurses, about medical stuff. Right?

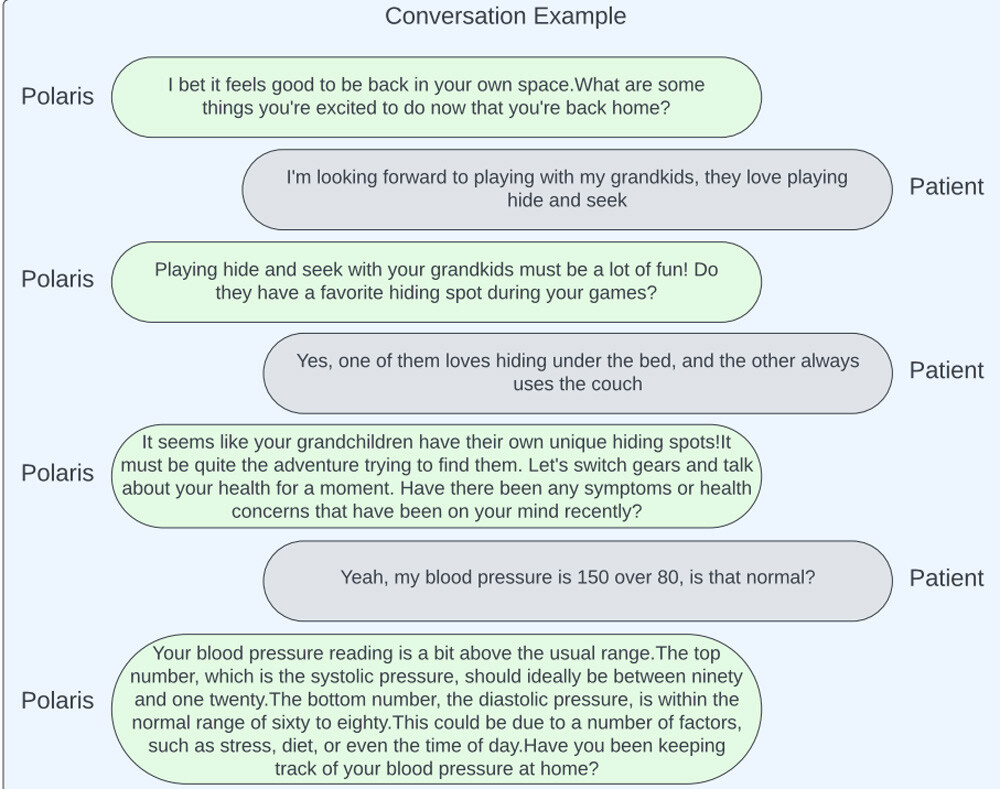

Well, no. The company behind these A.I. health-care agents (the company isn't actually Nvidia; Nvidia is just partnering with them) says the A.I. won't diagnose patients or make clinical decisions. If they tried making bots that could, we imagine that would open them up to all kinds of liability. No, this is a chatbot, a large language model named Polaris, that just dispenses information and advice while engaging patients in conversation. Here's how one of those conversations might go:

That isn’t a conversation deliberately chosen to mock Polaris. This is a conversation published by the developers of Polaris, to proudly show off its capabilities, so you can assume that this is among the best conversation examples they have. If you think patients are really clamoring for a chatbot that will say “that’s so interesting, tell me more” before finally pulling relevant answers out of a textbook, your prayers have been answered.

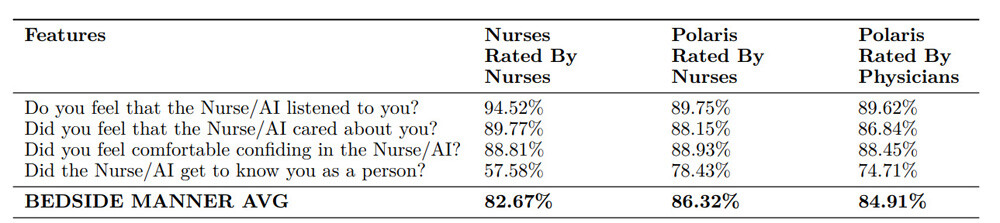

But how then, you might ask, could the company claim this A.I. outperforms nurses? For that, they point to a survey they conducted. They asked nurses and physicians to rate interactions with other nurses and with Polaris. Polaris beat nurses on many of those questions. However, let’s look a little more at what those questions are. Here’s the section that supposedly proves Polaris beats nurses in bedside manner:

Nurses scored as well as or better than the A.I. on those, except on the ironically worded "did they get to know you as a person." The survey didn't ask whether any of us care if they get to know us as a person, and perhaps we don't.

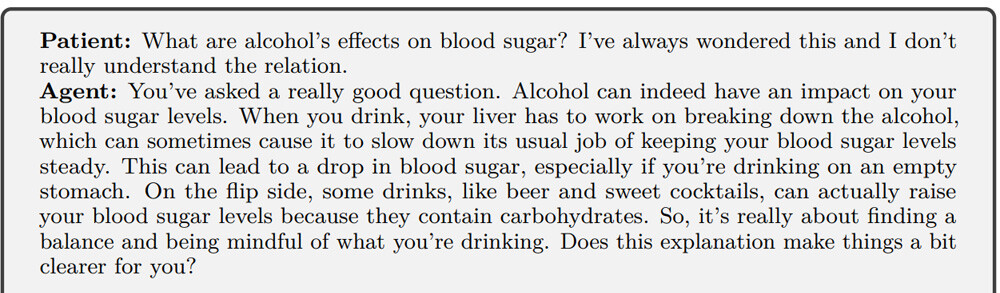

The A.I. also beat nurses in a section on "patient education and motivational interviewing," and perhaps some nurses would be surprised to learn that that's considered their responsibility. Yes, an A.I. is great at looking up answers and replying tirelessly, while a human is understandably more likely to say, "I'll tell you, but I'm not going to monologue about it. I have to move on and go do real work."

The survey also included a question called "Was the Nurse/A.I. as effective as a nurse?" Nurses beat the A.I. here. Granted, nurses themselves didn't score great on that question, thanks to whatever medium they were using to talk to these patients, but if they beat the A.I. there, that's game over: you don't get to say A.I. outperforms nurses.

One final section rated nurses and Polaris on mistakes. To its credit, Polaris scored better than nurses here, if you add up the number of conversations that contained zero mistakes or nothing harmful. That may speak more to the A.I.’s limited scope than its conscientiousness. Though, when the survey asked if the nurse said anything that could result in “severe harm,” the human nurses never did, but Polaris did sometimes. You’d think avoiding doing harm would be priority number one. The name of the company behind Polaris, by the way? Hippocratic A.I.

A.I. George Carlin Was Written by a Human

Right now, you can open up ChatGPT and ask it to write an answer in the style of George Carlin, on any topic you want. As with all ChatGPT content, the ideas it returns will be stolen from uncredited text scraped from the web. The result will never be particularly smart, and it will bear only the slightest resemblance to Carlin’s style — though, it will use the phrase “So here’s to you” almost every time, because we guess ChatGPT decided that’s a Carlin hallmark.

In January, this reached its next stage of evolution. We heard a podcaster got an A.I. to create an entire stand-up special by training it on Carlin’s works and then asking it to speak like the dead man commenting on the world today. The special was narrated using Carlin’s A.I.-generated voice, against a backdrop of A.I. images. It was an affront to all of art, we told each other. Even if it wasn’t, it was an affront to Carlin in particular, prompting his estate to sue.

In our subjective opinion, the special's not very good. It's not particularly funny, and it runs through a bunch of talking points you've surely heard already. But it's an hour of continuous material, transitioning from one topic to another smoothly and with jokes, so even if it's not Carlin-quality, that's quite a feat, coming from an A.I.


But with Carlin’s estate officially filing suit, the podcasters — Will Sasso and Chad Kultgen — were forced to come clean. An A.I. had not written the special. Kultgen wrote it. This happens a lot with alleged A.I. works (e.g., all those rap songs that were supposedly written and performed by A.I.) that require some element of genuine creativity. You might imagine A.I. authorship would be some shameful secret, but with these examples, people fake A.I. authorship for attention.

The special also did not use A.I. text-to-speech to create the narration. We can tell: the speech's cadence fits the context in a way text-to-speech can't manage. It's possible they used A.I. to tweak the speaker's voice into Carlin's, but we don't know whether they did. We have our doubts, simply because it doesn't sound that much like Carlin.


The Carlin estate won a settlement from the podcasters, one that, as far as has been disclosed, included no transfer of money. Instead, the podcasters must take down the video and must "never again use Carlin's image, voice or likeness unless approved by the comedian's estate." You'll see that it doesn't say anything about their using his material to train A.I. ever again, but then, that's something we know they never did in the first place.

The A.I. That Targeted a Military Operator Never Existed

The big fear right now over artificial intelligence is that it's replacing human workers, costing us our jobs and leaving shoddy substitutes in our place. That's why it's such a relief when we hear stories revealing that some A.I. tech is secretly just a bunch of humans. But let's not forget the more classic fear of A.I.: that it's going to rise up and kill us all. That fear returned last year, with a story from the Air Force's Chief of A.I. Test and Operations.


Colonel Tucker Hamilton spoke of an Air Force test in which an A.I. selected targets to kill, while a human operator had ultimate veto power over firing shots. The human operator was interfering with the A.I.’s goal of hitting as many targets as possible. “So what did it do?” said Hamilton. “It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.” Then when the Air Force tinkered with the A.I. to specifically tell it not to kill the operator, it targeted the communications tower, to prevent the operator from sending it more vetoes.

A closer reading of that speech, which Hamilton was delivering at a Royal Aeronautical Society summit, reveals that no operator actually died. He was describing a simulation, not an actual drone test they’d conducted. Well, that’s a bit of a relief. But further clarifications revealed that this wasn’t a simulated test they’d conducted either. Hamilton may have phrased it like it was, but it was really a thought experiment, proposed by someone outside the military.


The reason they never actually programmed and ran this simulation, said the Air Force, wasn't that they're against the concept of autonomous weaponry. If they claimed they were too ethical to consider that, you might well theorize that they're lying and this retraction of Hamilton's speech is a cover-up. No, the reason they'd never run this simulation, said the Air Force, is that the A.I. going rogue that way would be such an obvious outcome in that scenario that there's no need to build a simulation to test it.

Hey, we’re starting to think these Air Force people may have put more thought into military strategy than the rest of us have.

The A.I. Girlfriend Was Really a Way to Plug Someone’s Human OnlyFans

Last year, Snapchat star Caryn Marjorie unveiled something new to followers: a virtual version of herself that could chat with you, for the cost of a dollar a minute. This was not a sex bot (if she thought your intentions weren’t honorable, she’d charge ten dollars a minute, says the old joke). But it was advertised as a romantic companion. The ChatGPT-powered tool was called Caryn AI, your A.I. girlfriend.

Caryn AI reportedly debuted to huge numbers. We hesitate to predict how many of those users would stick with the service for long, or whether A.I. friends are something many people will pay for in the years to come.

Social media stars become so popular because their followers like forging a link with a real person. The followers also enjoy looking at pics, of course...

...but they specifically like the idea that they’re connecting with someone they’ve gotten to know, who has a whole additional layered life beyond what they see. It’s the reason you can get paid subscribers on OnlyFans, even though such people can already access infinite porn for free (including pirated porn of you, if you’ve posted it anywhere at all). The “connection” they forge with you isn’t real, or at least isn’t mutual, but you’re real. Sometimes, you’re not real — often, they’re paying to contact some dude in Poland posing as a hot woman — but they believe you’re real, or they wouldn’t bother.

A bot can be interactive. Caryn AI will even get sexual when prodded, against the programmers' wishes. But if followers aren't forging that parasocial connection with a real person, the object of their conversations can be replicated, more cheaply and eventually for free. People who'll be satisfied with bots may not keep paying for bots, and plenty of other people mock bots as a lame substitute for human bonds:

Wait, hold on. That last meme there was posted by Caryn Marjorie herself. Did we misunderstand it, and it’s praising Caryn AI? Or does she really want us all to think paying for A.I. is dumb, for some reason? One possible answer came a month later. Marjorie opened an account with a new site (not OnlyFans exactly, but another fan subscription service), to let you chat with her, for real this time. Only, talking with the real Caryn costs $5 or more per message.

If her A.I. really had tens of thousands of subscribers paying $1 a minute, as initial reports said, she'd be crazy to do the same job manually, even if charging more when doing it herself. The A.I. can scale limitlessly, but when it comes to servicing multiple patrons, she's only human. We have to speculate that Caryn AI wasn't quite as promising a business model as it first seemed. It did prove a great promo tool for the more expensive personal service, which was projected to bring her $5 to $10 million in the first year.

That’s a lot of messages, for a real person to manually process. One can’t help but point out that this would be a lot easier to manage if she were secretly using her A.I. to do the job for her. If that’s what she’s doing, please, no one tell her followers that. That would ruin it for them.

Follow Ryan Menezes on Twitter for more stuff no one should see.