5 Real World Problems That Are Straight Out Of Black Mirror

The future! Rocket ships, lasers, robots -- it is truly a far-flung, fantastical place. Except, uh ... we have all those things right now, and have for some time. The future isn't some vague, to-be-determined period of existence; it's literally tomorrow. So today, humanity has to address issues that would have been inconceivable a few paltry years ago. And frankly, some of this stuff still kind of sounds like someone got stoned and then tried to pitch a Black Mirror episode.

Fitbits Are Giving Away Military Intelligence

Nowadays it's routine for people to wear a fitness tracker, but by allowing our data to be shared, we're also allowing our habits to be shared. That normally shouldn't be problematic, unless your spouse is learning that your weekly jog takes you straight to the strip club ... or you're exercising on a classified military installation.

Thanks to a map that shows the jogging habits of the 27 million people who use Fitbits and the like, we can see splotches of activity in otherwise dark areas, like Iraq and Syria. Some of those splotches are known American military sites full of exercising soldiers, and some, by extrapolation, are sites that the military would rather keep unknown. One journalist saw a lot of exercise activity on a Somali beach that was suspected to be home to a CIA base. Someone else spotted a suspected missile site in Yemen, and a web of bases in Afghanistan was also revealed.

By analyzing the data, you could theoretically figure out patrol and supply convoy routes, and make educated guesses as to where on these bases soldiers eat, sleep, etc. That's a lot of useful information for someone planning an attack. You could also track individuals, potentially important ones. One researcher claimed they tracked a French soldier's entire overseas deployment and subsequent return home.

This wasn't an evil ploy by a terrorist cell in league with Big Fitness; you can turn that data tracking off. It's just that no one even thought about it until someone finally pointed out that it was a huge security issue. American rules for fitness trackers in the military are now being "refined," which we assume is PR speak for "Goddammit, turn that shit off." But it's only a matter of time until another seemingly innocuous technology accidentally gives away state secrets.

Space Commercialization Might Contaminate Planets

Elon Musk set a new precedent when he launched a car into space, and not only for tacky egotism. The rules about what corporations can and can't do in space are essentially nonexistent, because the government's authority ends somewhere around the thermosphere. Governments, however, have legal responsibilities listed in the Outer Space Treaty -- one of the few things America and the Soviet Union agreed on. Most of the world has signed as well, and in addition to promising not to put nukes on the Moon or claim all of Jupiter for the proud people of Denmark, adherents agree not to send Earth germs to other planets like the interplanetary version of coughing on the guy next to you at the movie theater.

That sounds a bit silly, but there's a real point: If Earth microbes accidentally end up on other planets and moons, it becomes impossible for scientists to tell if their "discovery" of life on Io is native, or if it originated from someone sneezing in a Tesla factory. So NASA and other government space agencies follow a strict anti-contamination protocol. American Mars rovers, for example, had all of their parts heated to 230 degrees Fahrenheit before launch, and they are routinely sterilized with alcohol. Even if your mission is only to orbit a planet (or swing by one), you have to prove that the odds of an accidental crash landing are about as long as the odds of winning a fair-sized lottery.

In theory, governments are also responsible for ensuring that any corporations within their borders follow the same rules. But once you move beyond launching satellites into Earth's orbit, the government's ability to enforce the law is about equal to your ability to enforce a responsible bedtime on yourself. Maybe that flying Tesla was carefully sterilized, or maybe Musk went out of his way to fart it up before launch. We don't know. And as more and more corporations talk about going to the Moon and Mars, we may have a germ problem.

There's also the issue of debris. While we like to think of space as a pristine void, the Solar System is starting to resemble a freshman's dorm room. Space missions are supposed to be as clean as possible, and a mission to another planet should either purposely burn up in the atmosphere or land when it's done. Musk's car was heading toward Mars, where plans for it were sort of a vague shrug. It could have eventually broken up and left debris around the planet, or it could have infected the surface. But instead, it went off-course toward the asteroid belt ... where it could also very well hit something and break up. Worst-case scenario, we end up with a bunch of junk floating around that could take out a future mission. Even if his car never hits anything, Musk still broke bold new ground in space litter.

Moderators Have To Watch All The Heinous Garbage That Gets Posted On Social Media

Try to imagine the worst job possible. Sewage sampler? Elephant masturbator? How about social media moderator? It sounds like a joke at first: "Facebook has moderators? Then explain all the crap I see every day!" Then you learn that their job is mostly to filter out pornography, and it sounds awesome. Aren't you supposed to get paid to do what you love?

But then you learn about the truly awful shit that moderators see as they cruise through a thousand flagged posts an hour, and you want to give them all hugs and raises. Child pornography, bestiality, hate speech, extreme violence ... if you can imagine something awful, someone has put it online. Specific examples included a man's testicles getting crushed, a boy getting his legs mangled by a truck, someone getting hit by a train, a man shooting himself in the head, suicide bombings, a man hurting and possibly killing small birds by having sex with them, and a woman whose body had been blown in two. Imagine dealing with images like that for 40 hours a week. It's like playing roulette, except the closest you get to winning is shots of consenting genitals smashing together.

Imagine being forced to watch Logan Paul videos and considering that a good day.

Over 100,000 people trawl through e-trash to keep Google, Facebook, YouTube, Twitter, and other major sites (relatively) safe to use. And you can't click away the moment you can tell a video is getting nasty -- you have to verify that the content is real, and learn as much as possible so you can try to destroy it at its source. And while you will become somewhat numb, dealing with the worst of what humanity has to offer day after day can haunt you. Turnover is high, and there are few resources for moderators who need counseling. Which, shit, has to be all of them, right?

Facebook's Fake News Problem Is A Feature, Not A Bug

Despite the fact that you probably took at least one break from reading this to check your Facebook feed, we still think of the site primarily as a vehicle for vacation photos where the worst thing that could happen is getting into a bitter argument with some friends about how to pronounce "GIF." We're all too smart to get suckered into politics, right?

But Facebook's politics come after you. Ten million users saw "Russian-linked" ads placed during the 2016 election, mostly focused on big, controversial issues like immigration and gun control. Facebook also admitted that it sold about $100,000 worth of ads to "inauthentic accounts." The issue isn't ads spamming "Vote for Clinton / Trump / X'algax, Destroyer of Souls!" Everyone already saw those a million times; they'd sway no one. The problem is that they spread stories like "FBI AGENT SUSPECTED IN HILLARY EMAIL LEAKS FOUND DEAD IN APPARENT MURDER-SUICIDE," which linked to a fake newspaper, quoting a man who doesn't exist, who lives in a town that doesn't exist (they spelled the town's name wrong).

If you see that stuff in your feed, wedged in between a cat video and your friend's new spaghetti sauce recipe, you don't click through to verify it. So it weasels into your brain as something you vaguely remember that may or may not be true.

Facebook has also become a playground for trolls, regardless of whether they have a political agenda or just want to watch the e-world burn. If you can think back to the Las Vegas shooting (before all those other shootings removed it from the headlines), a slew of hoaxes spread from the moment the news broke. Some people invented fake dead and missing victims solely to see how many likes they could get. Others claimed that the shooter was still active, invented fake perpetrators, assigned nonexistent motivations to the shooter, or claimed that he was a Democrat, a left-wing activist, or a recent convert to Islam (in reality, if the shooter had any political motives, he took them to his grave).

It's the cruelest and most devious form of misinformation, because it's hard to keep your bullshit detector functioning when you're in shock. Maybe some of those moderators could get a well-deserved break from the animal torture to focus on this crap instead?

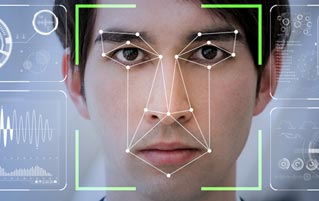

Someone Could Steal Your Face And Make Porn

We have the technology to swap one person's face onto another person's body in a video. That's fun if we're putting Nicolas Cage into Raiders Of The Lost Ark ...

... but it's a problem if someone is making it appear that a person said or did something they didn't really do. And that problem gains an extra level of creepiness when someone's face is slapped into a porn video. All it takes is some training, some raw footage of the subject, and a few spare hours. And if you're the sort of person inclined to make fake porn, you've probably got a lot of time on your hands.

Reddit had an entire community dedicated to this "hobby" until it was shut down, but that only made enthusiasts migrate elsewhere. The practice is called "deepfakes," after the Reddit user who pioneered it, and it started with editing the faces of celebrities onto preexisting porn. Some of the fakes ended up on porn sites being pitched to viewers as real, because porn is now a genre of fake news.

While it's unlikely that anyone would believe Taylor Swift was suddenly so hard up for money that she appeared on FuckBrothers.biz, it's still an ethically off-putting mess. It's not limited to the living. Someone made a video "starring" a young Carrie Fisher. And it's not limited to celebrities, either. Anyone armed with a scraper can pull photos from Facebook and Instagram, combine them with any of several search engines that look for porn stars by facial recognition, and make a fairly convincing video of anyone doing pretty much anything. Reddit users were making videos of their friends, co-workers, classmates, and exes. They were "only" for private use, but what happens when someone wants to manufacture revenge porn? So there you go: We're reaching a point in history where we can't even trust our pornography. And then what's left to believe in?

Mark is on Twitter and has a book.

Support Cracked's journalism with a visit to our Contribution Page. Please and thank you.